AI comments under an AI-generated article being inflated by AI account activity. Who even needs end users anymore? It's a perfectly autonomous system.

Who even needs end users anymore?

Advertisers do, and if they ever wise up, spez’s house of cards will crumble.

Advertisers don’t need end users. They just need happy bosses willing to cover their salaries.

In that sense, the business marketing team and the Reddit “look at our bullshit numbers” team are on the same side of the field.

It’s easy to bullshit engagement; it’s tougher to bullshit click-through rates and sales conversion metrics. It will take time to identify patterns, but inevitably the data will begin to reflect the truth. That’s when advertisers will break and move their money to more successful (organic) platforms.

At least, that is what happens in a sane world. As for our reality, who knows.

Yep. As long as sales and marketing can point to some bullshit KPI metrics as having exceeded all their goals, they act like they are the ones who bring in the profit.

Never mind that no one is buying anything and no traffic is going to the website; that is a different profit center’s problem and certainly not the fault of the MBA losers.

Ironically there used to be a subreddit for this.

It was intentionally meant to be view-only for humans, and the bots within it were named for and trained on other subreddits. So you had AdviceAnimalsBot, LinuxBot, GamingBot, AskRedditBot, GoneWildBot, etc. They would post on a rotation, emulating what users in their respective subreddits posted. They would all comment on each other’s posts, emulating their respective subreddit’s comments.

As an experiment it was actually really cool and fun to read through. It was also very clear that these were bots and you could identify which was which, and nothing was pretending to be a human for karma (there were no votes in the subreddit).

You mean r/SubredditSimulator? I miss that place, it was funny as hell.

Dead internet theory might be true.

Something that nobody mentions when they talk about dead internet theory, and that I think should be talked about, is that the worthless engagement trolls who are real people really do not and should not count as real people. They’re never going to give you worthwhile responses, and they don’t add anything to conversations. Unless they see an opportunity to pick a fight, they’re probably not even listening to you.

Therefore they’re not really any different from an AI-run account that’s not going to acknowledge you. And in some ways they are much worse, despite being technically “real people”.

If Lemmy becomes just a bit more popular, the same thing will happen.

Yep, and even worse. Lemmy has absolutely NO controls for quality and minimal moderation tools or capabilities. It’s in a much worse position than Reddit.

If it’s not already happening (and I think it is), it will.

What tools are missing to moderate an LLM bot?

I am a mod. I receive a report from a user, check it, and ban. All done with the current tools we have.

This doesn’t scale.

This is how we did it on Reddit too.

I receive a report from a user, check it, and ban

That’s all manual effort currently. Someone has to report a problematic post, you have to manually look at it, manually decide if it should be removed, and manually remove it. I’m not the biggest fan of automatically removing content, but when someone posts 500 posts all at once, that manual effort is a pain.

This is how we did it on Reddit too.

Is it? Or is one person claiming that?

Have a look yourself at the comment

I can’t exactly put my finger on it, but every god damn thing ChatGPT spits out has the same cadence or structure. Something about how it lays out points and wraps it up, and its usage of commas, is so noticeable to me.

To me, ChatGPT always sounds like a high school freshman submitting a half-assed English paper.

Hmm. As an overuser of commas myself, I’d better try to tone that down.

I wouldn’t say it’s necessarily overuse of commas; it’s how it consistently uses them the same way in nearly every response. It’s something about the overall structure of what it spits out. I wish a linguist would pop in and break it down for me. It’s a combination of tone, tense, arrangement of the sentences, how the paragraphs are put together - all of it feels very samey to me, unless it’s prompted otherwise.

There are only a few “styles” of sentences it spits out, and to me it seems quite obvious. Humans don’t write that consistently all the time; they’re messier.

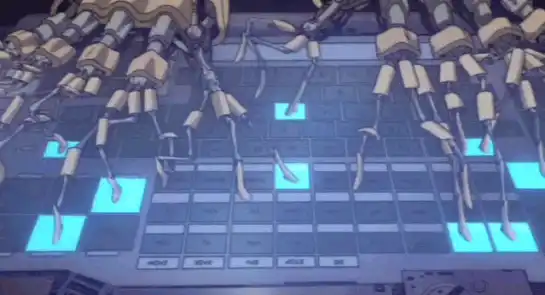

If you look closely you can tell it was typed with 6-finger hands