cross-posted from: https://lemmy.zip/post/24335357

Let’s hope Ea-nāṣir’s code is better than his copper.

I hear it’s prone to Rust.

Thank you for this cursed knowledge.

May I introduce you to emojicode…

Am I blind? I can’t see where 👀 is defined.

A little LESS chaotically, you can use emojis to name objects in Blender now… Which, I dunno, could be kinda fun in the right doses.

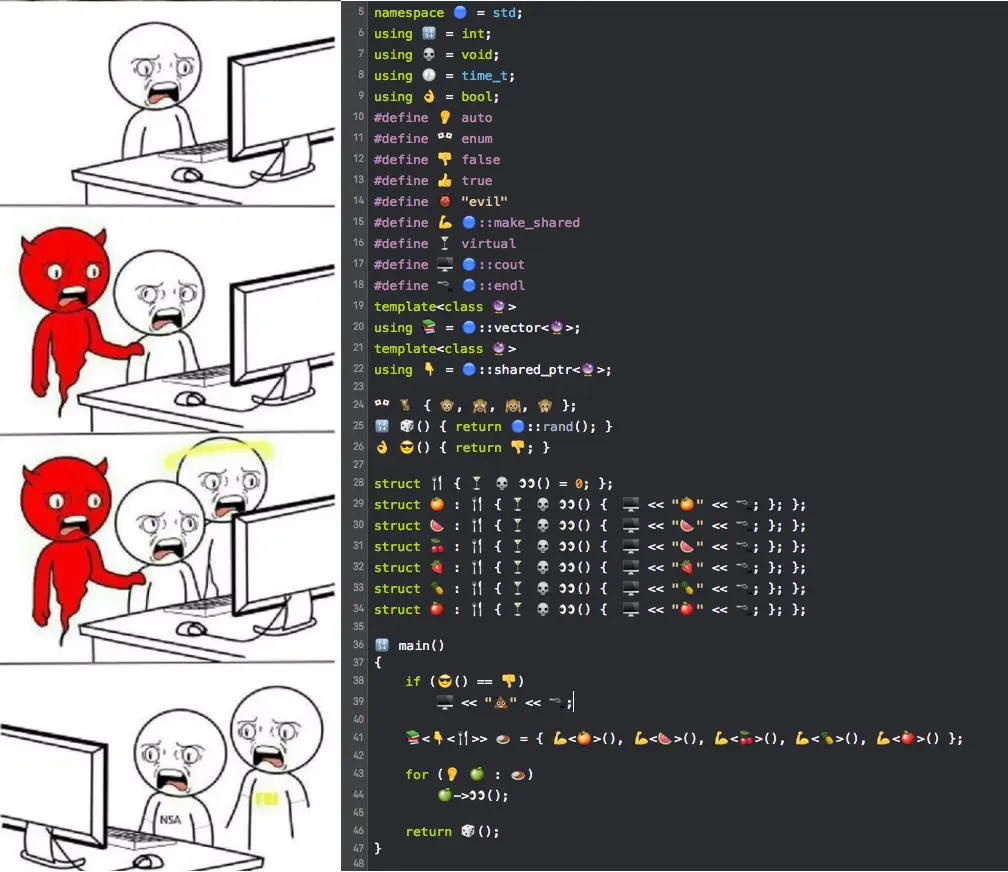

This picture had me laughing harder and harder as it went on, though. LOL.

Isn’t it all unicode at the end of the day, so it supports anything unicode supports? Or am I off base?

Ssh! 🫢 You’ll ruin the joke!

Yes, but the language/compiler defines which characters are allowed in variable names.

I thought most sane, modern languages use Unicode block identification to determine whether something can be used in a valid identifier or not. For example, none of the ‘numeric’ Unicode characters can be at the beginning of an identifier, similar to how you can’t have ‘3var’.

So once your programming language supports unicode, it automatically will support any unicode language that has those particular blocks.
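For what it's worth, Python is one language that works roughly this way, though it goes by per-character Unicode properties (see PEP 3131) rather than whole blocks. A quick sketch using the built-in `str.isidentifier()`; the sample names are made up purely for illustration:

```python
# Candidate names (illustrative only). "\u0663" is ARABIC-INDIC DIGIT THREE,
# written as an escape to avoid right-to-left rendering confusion.
candidates = ["var3", "3var", "แมว", "var\u0663", "\u0663var", "👀"]

# str.isidentifier() applies Python's own identifier rules to each string.
results = {name: name.isidentifier() for name in candidates}

for name, ok in results.items():
    print(f"{name!r}: {'valid' if ok else 'not valid'}")
```

Non-ASCII digits are rejected at the start of a name just like `3`, letters from other scripts are accepted, and the emoji is rejected because it is a symbol rather than a letter. Other languages draw the line elsewhere.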

Sanity is subjective here. There are reasons to disallow non-ASCII characters, for example to prevent identical-looking characters from causing sneaky bugs in the code, like this but unintentional: https://en.wikipedia.org/wiki/IDN_homograph_attack (and yes, don’t you worry, this absolutely can happen unintentionally).
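To make the lookalike problem concrete, here is a small Python sketch. The name `pay` and the dictionary standing in for a scope are hypothetical; the point is only that two names can render identically and still be different strings:

```python
import unicodedata

latin_a = "a"
cyrillic_a = "\u0430"  # renders like a Latin "a" in most fonts

# Two hypothetical variable names that look the same on screen.
name_1 = "p" + latin_a + "y"
name_2 = "p" + cyrillic_a + "y"

# A dict standing in for a scope: the two names do not collide.
scope = {name_1: 1, name_2: 2}

# Both are accepted as identifiers by Python's rules.
both_valid = name_1.isidentifier() and name_2.isidentifier()

# The official character names reveal the difference.
char_names = [unicodedata.name(ch) for ch in (latin_a, cyrillic_a)]

print(name_1 == name_2, len(scope), both_valid)
print(char_names)
```

An editor or linter that prints character names like this is one way to spot the mix-up, since the eye cannot.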

OCaml’s old m17n compiler plugin solved this by requiring you pick one block per ‘word’ & you can only switch to another block if separated by an underscore. As such you can do `print_แมว` but you couldn’t do `pℝint_c∀t`. This is a totally reasonable solution.
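A rough Python sketch of that one-block-per-word rule, in case it helps. This is not the OCaml plugin's actual implementation: the helper names are hypothetical, and because Python's standard library does not expose script or block data, the first word of each character's Unicode name is used as a crude stand-in.

```python
import unicodedata


def script_proxy(ch: str) -> str:
    """Crude stand-in for a character's block: first word of its Unicode name."""
    return unicodedata.name(ch, "UNKNOWN").split()[0]


def one_script_per_word(identifier: str) -> bool:
    """Return True if every underscore-separated word sticks to one 'script'."""
    for word in identifier.split("_"):
        # Decimal digits are skipped so that names like "var3" are not flagged.
        scripts = {script_proxy(ch) for ch in word if not ch.isdecimal()}
        if len(scripts) > 1:
            return False
    return True


print(one_script_per_word("print_แมว"))
print(one_script_per_word("pℝint_c∀t"))
```

The first name passes because `print` is all Latin and `แมว` is all Thai; the second fails because `ℝ` and `∀` are mixed into otherwise Latin words. A real implementation would want proper script data rather than this name-based guess.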

Unironically awesome. You can debate if it hurts the ability to contribute to a project, but folks should be allowed to express themselves in the language they choose & not be forced into ASCII or English. Where I live, English & Romance languages are not the norm & there are few programmers since English is seen as a prerequisite, which is a massive loss for accessibility.

The hotter take: languages like APL, BQN, & Uiua had it right building on symbols (like we did in math class) for abstract ideas & operations inside the language, where you can choose to name the variables whatever makes sense to you & your audience.

Yeah. Tbh, I always wondered why programming languages weren’t translated.

I know CS is all about English, but at least the default builtin functions of programming languages could get translated (as well as APIs that care about themselves).

Like, I can’t say I don’t like it this way (since I’m a native English speaker), but I still wonder what if you could translate code.

Variables could cause problems (more work with translation, or hard to understand if not translated). But still - programming languages have no declensions and the syntax is simpler, so it shouldn’t even compare to “real” languages with regards to difficulty of implementation.

Excel functions are translated. This leads to being pretty much locked out of any support beyond documentation if your system language isn’t English.

Programs aren’t written by a single team of developers that speak the same language. You’d be calling a library by a Hungarian with additions from an Indian in a framework developed by Germans based on original work by Mexicans.

If no-one were forcing all of them to use English by only allowing English keywords, they’d name their variables and functions in their local language and cause mayhem to readability.

[Edit:] Even with all keywords being forced to English, there’s often half-localized code.

I can’t find the source right now, but I strongly believe that Steve McConnell has a section in one of his books where he quotes a function commented in French and asks, “Can you tell the pitfall the author is warning you about? It’s something about a NullPointerException”. McConnell then advises against local languages even in comments.

Thanks, I hate it

Ea Nasir over here selling subpar code now

Most languages are like this. Even C is like this.

Security by ~~Obscurity~~ Antiquity, now that’s job security